In one of the conversations I’ve had with our senior editor, I was asked the question, "How did the first programmers program?" This led to a discussion about Babbage and Ada Lovelace, at the end of which I got assigned to research how it all began, "it" being the many firsts in computing history.

I dug into books and websites and was met with many revelations: Babbage isn’t technically the inventor of the computer, FORTRAN most definitely wasn’t the first high-level programming language, and we used to have styluses for CRT screens (really).

I was also surprised to find out how essential wars were in fueling the rapid progress of computer development, plus the contribution of many women that our textbooks chose to neglect. Hence, to fill the gaps in the history of computers and programs, I’ve put together 20 firsts in the world of computing, from bowling balls to WW2 and all that is in between.

1. First Computer: "Difference Engine" (1821)

The "Difference Engine" was a proposed mechanical computer designed to output mathematical tables. Charles Babbage (often called the "Father of the Computer") started working on it under a commission from the British government, but because of its high production cost the funding was stopped and the machine was never completed.
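To give a feel for how a machine could produce mathematical tables with nothing but addition, here is a minimal sketch of the method of finite differences, the principle the Difference Engine takes its name from. The function name `tabulate` and the example polynomial are my own choices for illustration, not anything taken from Babbage’s design.

```python
def tabulate(initial_differences, steps):
    """Tabulate a polynomial using only repeated addition.

    initial_differences[0] is the value at the first point,
    [1] the first difference, [2] the second difference, and so on.
    (Hypothetical helper, written for illustration only.)
    """
    diffs = list(initial_differences)
    table = []
    for _ in range(steps):
        table.append(diffs[0])
        # Fold each higher-order difference into the one below it.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table


# f(x) = x^2 + x + 41 at x = 0, 1, 2, ...
# f(0) = 41, first difference f(1) - f(0) = 2, second difference = 2.
values = tabulate([41, 2, 2], 5)
print(values)
```

Because the second difference of a quadratic is constant, every new table entry needs only two additions and no multiplication at all.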

2. First General Purpose Computer: "Analytical Engine" (1834)

The "Analytical Engine" was also a proposed mechanical computer, the input of which was supposed to be punched cards, with programs and data punched on them. Another brainchild of Charles Babbage, this machine was also not completed.

3. First Computer Program: algorithm to compute Bernoulli numbers (1841 – 1842)

Ada Lovelace (often called the world’s first computer programmer) began translating Italian mathematician Luigi Menabrea’s records on Babbage’s Analytical Engine in 1841. During the translation she became interested in the machine and added her own notes to the translation. One of them, Note G, contained an algorithm for computing Bernoulli numbers on the Analytical Engine, which is considered to be the very first computer program.
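For the curious, here is what computing Bernoulli numbers can look like today. This is a minimal modern sketch, not a reconstruction of Lovelace’s Note G program: it uses the standard recurrence in which B_0 = 1 and, for m ≥ 1, the sum of C(m+1, k)·B_k over k = 0..m equals zero (the convention that gives B_1 = -1/2). The function name `bernoulli_numbers` is my own.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, B_1, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m. (Illustrative helper, not Lovelace's method.)
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(f"B_{index} = {value}")
```

Exact fractions are used instead of floating point because Bernoulli numbers are rational and rounding errors accumulate quickly in the recurrence.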

4. First Working Programmable Computer: Z3 (1941)

Konrad Zuse (often called the inventor of the computer) already had a working mechanical computer, the Z1, but it worked for only a few minutes at a time. Switching to a different technology, relays, led to the Z2 and eventually the Z3. The Z3 was an electromechanical computer for which program and data were stored on external punched tapes. It was a secret project of the German government and was put to use by the German Aircraft Research Institute. The original machine was destroyed in the bombing of Berlin in 1943.

5. First Electronic Computer: Atanasoff-Berry Computer (ABC) (1942)

Created by John Vincent Atanasoff and Clifford Berry, and named after them, the Atanasoff-Berry Computer (ABC) was used to solve simultaneous linear equations. It was the very first computer to use binary to represent data and electronic switches instead of mechanical ones. The computer, however, was not programmable.

6. First Programmable Electronic Computer: Colossus (1943)

Colossus, created by Tommy Flowers, was a machine built to help the British decrypt German messages encrypted with the Lorenz cipher during World War II. It was programmed by means of electronic switches and plugs. Colossus brought the time needed to decipher the encrypted messages down from weeks to mere hours.

7. First General Purpose Programmable Electronic Computer: ENIAC (1946)

Funded by the US Army, ENIAC (Electronic Numerical Integrator And Computer) was developed at the Moore School of Electrical Engineering, University of Pennsylvania, by John Mauchly and J. Presper Eckert. ENIAC was 150 feet wide and could be programmed to perform complex operations like loops; programming was done by altering its electronic switches and cables. It used card readers for input and card punches for output. It helped with computations on the feasibility of the world’s first hydrogen bomb.

8. First Trackball (1946/1952)

Why the two years for the first trackball? Allow me to explain.

The first year is the one given by Ralph Benjamin, who claimed to have created the world’s first trackball while he was working on a monitoring system for low-flying aircraft in 1946. The invention he described used a ball to control the X-Y coordinates of a cursor on screen. The design was patented in 1947 but was never released because it was considered a "military secret". The military opted for the joystick instead.

The second contender for the world’s first trackball, used in the Canadian Navy’s DATAR system back in 1952, was invented by Tom Cranston and co. This trackball design had a mock-up which used a Canadian bowling ball spun on "air bearings" (see image below).

9. First Stored-Program Computer: SSEM (1948)

To overcome the shortcomings of delay-line memory, Frederic C. Williams and Tom Kilburn developed the first random-access digital storage device, based on the standard CRT. The SSEM (Manchester Small-Scale Experimental Machine) was built to put that storage device to practical use. Programs were entered in binary form using 32 switches, and the output was shown on a CRT.

10. First High-Level Programming Language: Plankalkül (1948)

Although Konrad Zuse started working on Plankalkül in 1943, it was only in 1948 that he published a paper about it. Unfortunately, it did not attract much attention. It would take close to three decades for a compiler to be implemented for it, one created by Joachim Hohmann in a dissertation.

11. First Assembler: "Initial Orders" for EDSAC (1949)

An assembler is a program that converts mnemonics (low-level) into their numeric representation (machine code).

The initial orders in EDSAC (Electronic Delay Storage Automatic Calculator) were the first such system.

They were used to assemble programs from paper tape input into memory and then run them.

The programs were written in mnemonic codes instead of machine codes, which makes the “initial orders” the first ever assembler: they processed symbolic low-level program code into machine code.
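To make the idea of "mnemonics in, numbers out" concrete, here is a toy assembler sketch. Everything in it is hypothetical: the four mnemonics, their opcode values and the 12-bit word layout are made up for illustration and have nothing to do with EDSAC’s actual order code.

```python
# Hypothetical instruction set: mnemonic -> 4-bit opcode.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source):
    """Translate mnemonic source text into a list of numeric machine words.

    Each word packs the opcode into the high bits and an 8-bit operand
    into the low bits (an invented layout, for illustration only).
    """
    words = []
    for line in source.splitlines():
        line = line.split(";")[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 0xFF:
            raise ValueError(f"operand out of range: {operand}")
        words.append((OPCODES[mnemonic] << 8) | operand)
    return words


program = """
LOAD 10    ; fetch the value stored at address 10
ADD 11     ; add the value stored at address 11
STORE 12   ; write the result to address 12
HALT
"""
machine_code = assemble(program)
print([hex(word) for word in machine_code])
```

The programmer gets to write `ADD 11` and the assembler does the bookkeeping of turning it into a number, which is the whole trick.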

12. First Personal Computer: "Simon" (1950)

"Simon" by Edmund Berkeley was the first affordable digital computer. It could perform four operations: addition, negation, greater than, and selection. The input was punched paper, and the program ran on paper tape. The only output was through five lights.

13. First Compiler: A-0 for UNIVAC 1 (1952)

A compiler is a program that converts a high-level language into machine code. The A-0 System was a program created by the legendary Grace Hopper to convert a program specified as a sequence of subroutines and arguments into machine code. A-0 later evolved into A-2, which was released to customers with its source code, making it possibly the very first open source software.

14. First Autocode: Glennie’s Autocode (1952)

An Autocode is a high-level programming language that uses a compiler. The first autocode and its compiler appeared at the University of Manchester to make the programming of the Mark 1 machine more intelligible. It was created by Alick Glennie, hence the name Glennie’s Autocode.

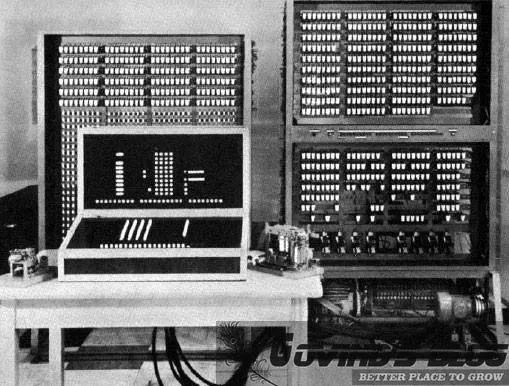

15. First Real-Time Graphics Display Computer: AN/FSQ-7 by IBM (1951)

AN/FSQ-7 was based on one of the first computers that showed real-time output, Whirlwind. It became the lifeline for the US Air Defense system known as Semi-Automatic Ground Environment (SAGE). The computers showed tracks for the targets and automatically showed which defences were within range. AN/FSQ-7 had 100 system consoles; here’s one (image below), the OA-1008 Situation Display (SD), with a light gun used to select targets on screen for further information.

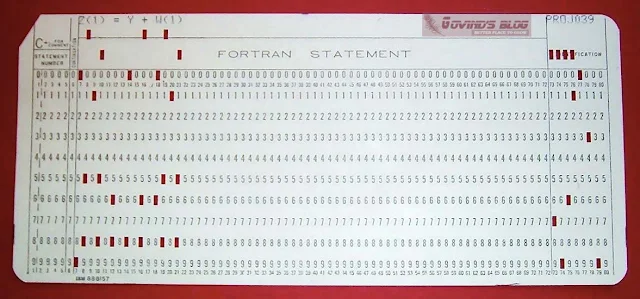

16. First Widely Used High Level Programming Language: FORTRAN (1957)

If you check the textbooks, you will find FORTRAN listed as the first high-level programming language. It was thought up by John W. Backus, who disliked writing programs and decided to create a programming system to make the process much easier. FORTRAN greatly reduced the number of programming statements required to get a machine running. By 1963, more than 40 FORTRAN compilers were already available.

17. First Mouse (1964)

It was while sitting in a conference session on computer graphics in 1964 that the idea of a mouse came to Douglas Engelbart. He thought up a device with a pair of small wheels (one turning horizontally, the other vertically) that could be used to move a cursor on a screen. A prototype (see below) was created by his lead engineer, Bill English, but neither English nor Engelbart ever received royalties for the design because, technically, it belonged to SRI, Engelbart’s employer.

18. First Commercial Desktop Computer: Programma 101 (1965)

Also known as Perottina, Programma 101 was the world’s first commercial PC. It could perform addition, subtraction, multiplication, division, square root, absolute value, and fraction. For all that it could do, it was priced at $3,200 (it was a very different time) and managed to sell 44,000 units. Perottina was invented by Pier Giorgio Perotto and produced by Olivetti, an Italian manufacturer.

19. First Touchscreen (1965)

It doesn’t look like much, but this was the first touchscreen the world had ever known. It’s a capacitive touchscreen panel with no pressure sensitivity (there’s either contact or no contact), and it only registers a single point of contact (as opposed to multitouch). The concept was adopted for use by air traffic controllers in the UK up until the 1990s.

20. First Object Oriented Programming Language: Simula (1967)

Based on C. A. R. Hoare’s concept of class constructs, Ole-Johan Dahl & Kristen Nygaard updated their "SIMULA I" programming language with objects, classes and subclasses. This resulted in the creation of SIMULA 67 which became the first object-oriented programming language.

Final thoughts

As much as this post is about what we can learn from the many firsts in computing history, it is hard not to immerse ourselves in the history itself. As a result, at least for me, we become more appreciative of the work done by the generations before ours, and we can better understand what drives the many changes that shape the world we live in today.

I hope this post inspires you as much as it inspired me. Share your thoughts on these firsts, and if I missed any, which I’m sure I did, do add them in the comments.